Changes for one?

In the last post we highlighted the importance of getting input from more than one person.

This post looks at a variation on that. We will often get input from various parts of an organisation which need different information and different verification. As a result, some tables will be important to one part of an organisation and not to others, and some columns in a particular table will be more important to one group of workers than they are to others. This we expect.

Imagine that one contact in a company says that a particular table should have 50 columns when all of your other contacts say that it should have 5 columns. The logic which demands 50 columns seems reasonable. What should you do?

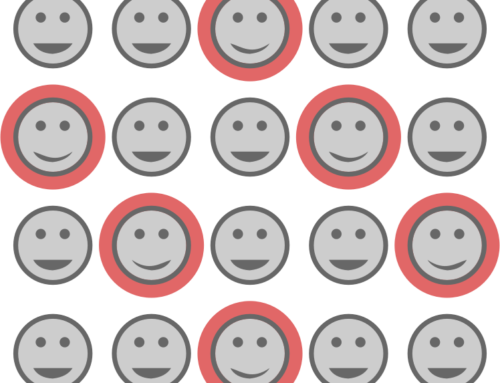

My recommendation is: Be careful not to add too much infrastructure or too many tables/columns for only one person. If others have not asked for them, leave them out. The person asking for the extra infrastructure may be completely correct. They may be a genius who is years ahead of everybody else in their company. But if they are, then let them convince the others in their company first rather than leading you off on a wild goose chase where the majority of your contacts will believe you have got it wrong. Once they have convinced the others in their company, no-one will be surprised when you add the extra columns.

Models always tend to grow, so don’t let one person change the size or scope of a solution markedly.

Aim for a “middle of the road” solution that is most likely to satisfy the most people while also being as simple as possible.

But what if the “one person” wanting changes is the data modeller or software developer? Here we have two common problems:

1. Just in case

2. Over-engineering

Let’s start with the first.

Just in case…

Everyone falls into this trap from time to time. I have done so far more times than I should have in various areas: mechanical equipment, software, data modelling and all sorts of administrative features. When I can’t fully justify a pet preference, I argue that it should be provided “just in case”. It is hard to limit our designs to only what is required now because we can always see possible changes that may be required in the near future. Extra columns. Extra tables. Extra indexes, triggers or validation. It’s hard to throw away that clever little extra idea.

Let’s have a quick think about this.

Imagine you add an unnecessary feature or data field “just in case”.

It may be needed in the near future, in which case you quickly announce its far-sighted availability, look remarkably clever and revel in the pats on the back you receive. Sadly, this is a rare situation.

But what are the other alternatives? What else can happen? Here are some suggestions:

(a) A small variation on your original idea is required. In this case, you are likely to keep your original feature (just in case) and engineer the slightly modified variation anew. Total saving: nothing. Risk: your original feature remains unused, left to confuse maintainers throughout the lifetime of the product or application.

(b) Other features are required, but yours is never needed. Your “just in case” feature was a waste of time and stays around to mislead maintainers for the remainder of the application or product lifetime.

(c) The need only arises some time later. By this time, you have moved on to another project or may have forgotten your clever provision of this feature. If you have moved on, the person trying to add the feature will see your existing feature, but will probably lack the confidence to use it for fear that there is some more complexity in it that they have not noticed. The feature will be added from scratch, woven carefully around your existing feature and possibly breaking it anyway.

If you are still around, but have forgotten your streak of brilliant forethought, you will still go through all the design necessary before you find or remember that you had already provided the required feature. As a result, the savings are minimal and there is, in fact, a good chance that your original feature will remain duplicated and intact but unused, living on to confuse maintainers even more.

Overall, the likely benefits of “just in case” solutions are close to non-existent. Extra, unused features almost always cost time, effort and money, so the expected return on investment is normally negative. Every so often, you might hit the jackpot, but the odds are stacked against you. In the long run, providing only essential features is cheaper and safer for everyone: unused features cost money.
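The same argument can be put as a rough back-of-envelope model. The symbols are introduced here only for illustration and are not measured quantities: let $p$ be the probability that the feature is ever used as built, $S$ the saving when it is, $C$ the cost of building it, and $M$ the cost of maintaining and working around it for the life of the application.

$$
\mathbb{E}[\text{return}] = p\,S - (C + M)
$$

$C$ and $M$ are paid whether or not the feature is ever used, while cases (a) to (c) above all push $p\,S$ towards zero, so the result stays negative unless $p\,S$ happens to be unusually large.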

Rule: Don’t add things “just in case”.

Over-engineering

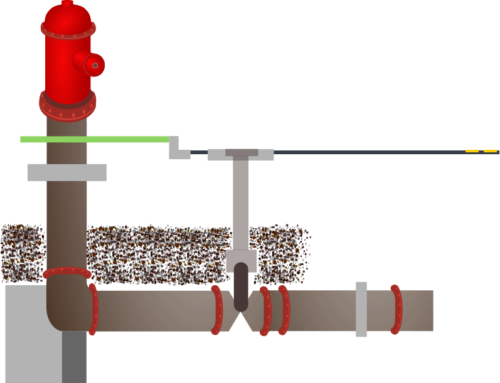

Quite a few years ago, I worked as a mechanical engineer for a water supply and sewerage corporation. At one stage, I was doing some quick reviews of the basic size of equipment in a pumping station, and I started to notice a consistent theme. There were more than twice as many pumps as needed for the design flow. The shafts which drove the pumps were roughly twice the size they needed to be for the maximum power that could be used by the pumps, despite the fact that there were also special couplings which would shear off if the maximum torque was exceeded. The motors were much more powerful than the rated maximum power for the pumps. And so it went on. Everything was larger than it needed to be.

And the increases tended to be cumulative. The pumps needed a certain minimum shaft size, but a larger shaft was used. Because of the larger shafts, the bearings and couplings needed to be larger. With larger bearings, the lubrication systems had to be bigger as well, and all of this required more space in the equipment rooms. A larger crane was needed to lift the larger pumps and other equipment, and the steelwork in the building had to be stronger to carry the larger design load. Over-engineering for just one item caused many knock-on effects, not the least of which was the increased complexity that often comes with increasingly large equipment.

Can a data model suffer in the same way? I think the answer is “Yes.”

A few years after the events above, I was working on a software project where the zealous guardians of the database protected it from all sorts of “invalid data”. Constraints and triggers examined every insert, update or delete, waiting to pounce on any form of errant behaviour. Large quantities of data needed to be imported and one particular data import took 24 hours. We had our own copy of the database and the data to be imported, so we decided to disable and remove all the data checking, and suddenly the import took only 8 hours. To add insult to injury, real use of the data showed that many of the data checks were wrong and prevented proper manipulation of the data.
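Here is a minimal sketch of the problem. It uses Python's built-in `sqlite3` module rather than whatever database that project used. The table `reading`, the `flow` column, the `check_log` counter, the `import_flows` helper and the "no negative flow" rule are all hypothetical. The sketch does not reproduce the 24-hour and 8-hour timings. It only shows that a trigger runs once for every inserted row, and that a rule which is wrong for real data (here, a brief reverse flow) rejects an otherwise valid import.

```python
import sqlite3


def import_flows(flows, with_checks):
    """Bulk-load flow readings; return (rows_loaded, trigger_runs, error)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE reading (id INTEGER PRIMARY KEY, flow REAL NOT NULL);
        -- Counts how many times the validation trigger fires.
        CREATE TABLE check_log (runs INTEGER NOT NULL);
        INSERT INTO check_log VALUES (0);
    """)
    if with_checks:
        # Hypothetical over-strict rule: reject negative flow.
        # A row-level trigger like this runs once for every inserted row.
        conn.executescript("""
            CREATE TRIGGER reading_check BEFORE INSERT ON reading
            BEGIN
                UPDATE check_log SET runs = runs + 1;
                SELECT RAISE(ABORT, 'negative flow rejected') WHERE NEW.flow < 0;
            END;
        """)
    error = None
    try:
        with conn:  # commit on success, roll back the whole import on failure
            conn.executemany(
                "INSERT INTO reading (flow) VALUES (?)", [(f,) for f in flows]
            )
    except sqlite3.DatabaseError as exc:
        error = str(exc)
    loaded = conn.execute("SELECT COUNT(*) FROM reading").fetchone()[0]
    runs = conn.execute("SELECT runs FROM check_log").fetchone()[0]
    conn.close()
    return loaded, runs, error


normal = [12.5, 3.0, 7.2]
with_reverse_flow = [12.5, 3.0, -0.4, 7.2]  # assumed to be valid in reality

print(import_flows(normal, with_checks=True))              # trigger fires per row
print(import_flows(with_reverse_flow, with_checks=True))   # whole import rejected
print(import_flows(with_reverse_flow, with_checks=False))  # every row loads
```

On a real database the per-row cost multiplies across millions of rows and every trigger and constraint on the table. A single wrong rule is enough to stop an entire load.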

So what can we learn from these examples?

Over-engineering tends to spread like a bushfire. If it starts in one place, it will normally spread to other places, so the best solution is not to let it start. If you add extra fields to give a “better” solution than the one required, you will probably add many more. If you add a field that will only ever hold one value but design it to allow many different values, you will be much more likely to do the same in other places – for completeness. If you make a field store data to five decimal places when you don’t need any decimal places at all, you may be straying down the over-engineering path, as well as the “just in case” path discussed above.

Rule: Don’t over-engineer. The effects are cumulative and they take on a life and reason of their own.

Data models can be made over-complicated by adding fields that are not necessary. Excessive abstraction, over-generalisation and splitting data into multiple fields or even multiple tables can also represent over-engineering. Over-engineering often leads to under-engineered solutions – ones that do lots of things that aren’t needed, but don’t do everything that is needed. The quote about simplicity, “Everything should be made as simple as possible, but not simpler”, is attributed by many to Albert Einstein and is quite correct. Don’t make things too simple, but make them no more complex than is necessary.
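A common form of over-generalisation is the generic "attribute/value" table, often called entity-attribute-value, which is used in place of ordinary columns. The sketch below compares it with a plain table, using Python's built-in `sqlite3` module. The `pump` and `pump_attribute` tables and their columns are hypothetical. Both designs answer the same question, but the generic one needs a name filter and a `CAST`, and it has no way to declare that a power rating is required.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Plain design: one column per fact that is actually needed.
    CREATE TABLE pump (
        id       INTEGER PRIMARY KEY,
        station  TEXT NOT NULL,
        power_kw REAL NOT NULL
    );
    -- Over-generalised design: every fact becomes a (pump, name, value) row.
    CREATE TABLE pump_attribute (
        pump_id INTEGER NOT NULL,
        name    TEXT NOT NULL,
        value   TEXT NOT NULL,
        PRIMARY KEY (pump_id, name)
    );
""")

pumps = [(1, "North", 75.0), (2, "North", 150.0), (3, "South", 90.0)]
with conn:
    conn.executemany("INSERT INTO pump VALUES (?, ?, ?)", pumps)
    for pump_id, station, power in pumps:
        conn.execute("INSERT INTO pump_attribute VALUES (?, 'station', ?)",
                     (pump_id, station))
        conn.execute("INSERT INTO pump_attribute VALUES (?, 'power_kw', ?)",
                     (pump_id, str(power)))

# The same question asked of each design: which pumps are rated above 80 kW?
plain = conn.execute(
    "SELECT id FROM pump WHERE power_kw > 80 ORDER BY id"
).fetchall()
generic = conn.execute(
    "SELECT pump_id FROM pump_attribute "
    "WHERE name = 'power_kw' AND CAST(value AS REAL) > 80 ORDER BY pump_id"
).fetchall()

# A pump with no power rating: the plain table's NOT NULL rejects it...
try:
    with conn:
        conn.execute("INSERT INTO pump (id, station) VALUES (4, 'East')")
    plain_accepts_incomplete = True
except sqlite3.IntegrityError:
    plain_accepts_incomplete = False

# ...while the generic table cannot say that power_kw is mandatory.
with conn:
    conn.execute("INSERT INTO pump_attribute VALUES (4, 'station', 'East')")
generic_accepts_incomplete = conn.execute(
    "SELECT COUNT(*) FROM pump_attribute WHERE pump_id = 4"
).fetchone()[0] == 1

print(plain, generic)
print(plain_accepts_incomplete, generic_accepts_incomplete)
```

The generic table looks more flexible, but that flexibility is exactly what removes the checks the plain design gets for free. Those checks then tend to reappear somewhere else, usually in application code.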

Thought: What valid use cases can properly test an over-engineered solution?
