Data modelling is not the only limiter of performance.

When I first started working with databases, performance in all aspects of computing was much worse than it is now. However well an expert might craft a query, and however simple the query might be, technical restrictions would always limit the performance to just a few queries per second, at best.

Since that time, processors have progressed in leaps and bounds, memory is faster and more plentiful, hard disks store more and more and take less and less time to read or write it, and network connections are faster. Everything is quicker than it was. Even the increasing complexity of operating systems and other software has not been able to overrun the amazing improvements.

As a developer of software in earlier days, I found that queries had to be minimised and simplified as much as possible. Caching was essential, but had to be tuned so that the cache did not grow too large or else it would make everything grind to a halt.

When I last checked the performance of some of my software running on a fairly basic laptop, it was able to execute thousands of queries per second. The technical limitations that used to plague the development of complex database-driven software are simply not there any more.

How does this relate to performance?

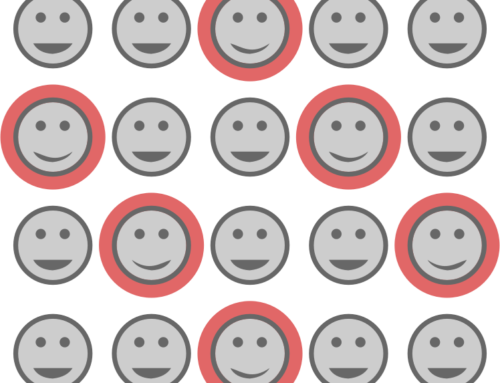

Well, exceptional power does not guarantee exceptional performance. Executing 50,000 queries in 10 seconds is terrible performance if only 1% of those queries were actually necessary!

The key to avoiding this is to know what your software is doing.

“Acceptable” or “Wonderful”?

Frameworks or toolkits can simplify a developer’s work, but they can also do unexpected things at times. Sometimes fetching a simple attribute of an object can take several queries, and fetching the same attribute for hundreds of objects can take thousands of queries because of the design or assumptions of the framework.

However, even with this sort of wasted effort, we may still find that the performance of our software is acceptable, as our hardware is so fast that ridiculous inefficiency is still able to be supported. So does it really matter?

My answer is that there is a big difference between “acceptable” and “wonderful” when it comes to performance.

If you develop software and you want to maximise performance, it is utterly essential to know and understand the queries your software makes. Find out how to log the queries being generated. Some software will allow you to record every query, or database drivers or the databases themselves will let you log the queries being executed. Examine them until you understand what they are all doing, then decide whether they are necessary or not. Don’t just leave it up to a framework to do all that work for you. All too often, you will find that many of the queries being executed are not necessary or are being done in an inefficient way. Maybe your software executes 50,000 queries when it should only use 500 – or maybe only one.

If you are a user of technical, data-driven software, I would recommend doing the same tests. You may be surprised at what will show up. Unfortunately, even if your supplier has checked these things in the past, software that is still being developed has a habit of degrading in this area. Constantly improving hardware helps to conceal this. New features are added and new queries come along with them. Some changes have unintended consequences, so it is still worth keeping an eye on the numbers of queries being performed for various tasks.

How many queries?

Once when I was working on some database-driven software, a customer complained that the software was “hanging”, and they were having to kill the process. Investigation showed that, in actual fact, the software was still working, and the user was interrupting a series of queries which would have successfully completed if they had waited. How long would it have taken? More than 37 hours by my estimates – once the millions of queries being executed were complete. It was another case of doing several queries for each row fetched from a table, but in this case there were millions of rows being fetched. The final insult was that most of the data wasn’t necessary anyway and was being immediately thrown away!

After a few hours’ work, I was able to reduce the time taken to a few minutes, but the improvement only came from looking at the queries that were being executed and understanding why they were happening. Getting rid of most of the queries was always going to be the only way to fix the problem.

When software is executing very large numbers of queries, this is likely to be only possible solution: get rid of them.

How? Why would queries ever be done if they weren’t necessary?

Why so many queries?

Here are five typical reasons:

- Queries are often executed just to check the existence of rows, particularly if your objects live in more than one table. This is simply testing to make sure that the stored data is valid, and is a heavy cost for each user to bear every time they use the software. Some will probably disagree with me, but I think that such checking is an unnecessary waste of time. Instead, I would recommend creating separate procedures for making sure that data is valid, then ensuring that those checks are run as often as necessary to make certain that the chance of invalid data is very small.

- Sometimes objects are fetched using several queries each, instead of using a single query to fetch all of the rows needed. Executing one or more queries for each row and fetching the results takes more time and demands more resources than simply executing a single query and then fetching all the rows returned from that single query. The single query will sometimes end up more complex, but the reduction in the number of queries required is almost always worthwhile. Typically we are looking to execute a single query that joins two or more tables and fetches all the fields we need for each row that we need from the tables.

- Queries are sometimes done “just in case”. The information may be needed later, the argument goes, so why not fetch it now while we are fetching other things? This may be either valid or wasteful, depending on the situation, but in general, fetching things “just in case” is wasteful.

- Sometimes the information is fetched but not stored, meaning that when it is required at more than one stage of a process, it must be fetched each time. This is a question of software design and efficiency. If extra information must be stored in the software, the program will consume more memory, but this is probably still worthwhile if speed is of the essence.

- Re-entrancy or recursion – sometimes software components can be chained together in a way that causes a query to be generated once and then generated again before the results are stored. This can cause exactly the same query to be generated more than once – unnecessarily.

Conclusion

The speed of modern hardware can allow inefficiencies to go almost unnoticed. Counting the number of queries software performs for various operations is valuable. You may be shocked how many queries your software performs.

Leave A Comment