In a database, good performance is vital.

So, how can we be sure we will have good performance in a project? What makes a data model good or bad for performance?

And what is good performance anyway?

Let’s start by defining good performance.

Good performance, for software and databases alike, is when users can access and manipulate data without ever feeling that they should be able to do it faster than the software allows.

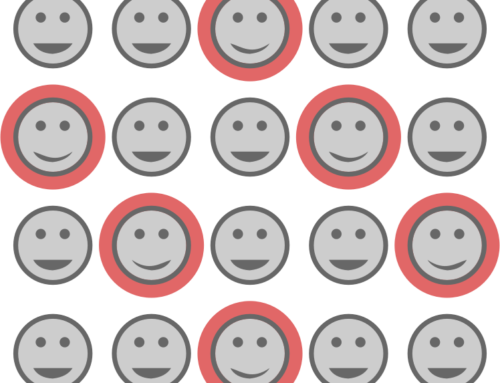

Of course, users aren’t always completely reasonable. Nevertheless, they are the ones who decide whether your work performs well or badly. So don’t let performance be an afterthought: design for good performance from the start. If you leave it until the end, you may need to make significant changes to the data model to achieve the required performance.

Here are three applications of the 80/20 rule to performance (don’t take the 80 or 20 figures too literally):

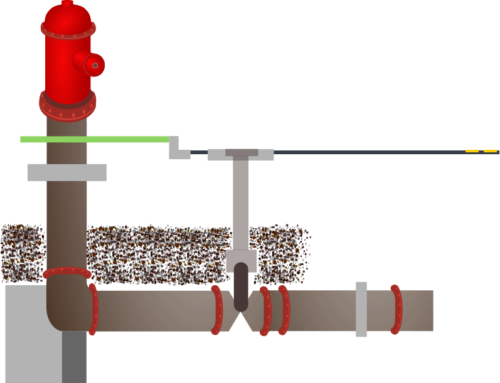

- In general, 80% of the use of your data model will involve only 20% of the features it makes available. Your design must make sure that these features are blindingly fast. If necessary, it’s even worth devoting 80% of your performance-optimisation time to them. Whether that means adding database indexes, tweaking queries or denormalising/duplicating data, do whatever is necessary to achieve excellent performance for those features.

- If 80% of a user’s interaction with the data you are modelling is fast enough, then they will probably tolerate the other 20% (as long as the 20% doesn’t eat up 80% of their time!).

- About 80% of the performance improvements achieved will probably come from 20% of the tweaks that are made.
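To make the first point more concrete, here is a minimal sketch of one of the tweaks mentioned above: adding an index to support a frequently run query. It uses Python’s built-in `sqlite3` module purely for illustration, and the `orders` table, its columns, the `idx_orders_customer` index and the “hot” query are all hypothetical, not taken from any particular data model. The sketch compares SQLite’s `EXPLAIN QUERY PLAN` output before and after the index is created.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    """Return the 'detail' strings from SQLite's EXPLAIN QUERY PLAN for a query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # Each plan row has four columns; the last one is the human-readable detail.
    return [row[3] for row in rows]


conn = sqlite3.connect(":memory:")

# Hypothetical table and data, for illustration only.
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 1000, i * 1.5) for i in range(10_000)],
)

# Assumed to be one of the "20% of features" that users rely on most.
hot_query = "SELECT total FROM orders WHERE customer_id = ?"

before = query_plan(conn, hot_query, (42,))

# Index the column the hot query filters on.
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")

after = query_plan(conn, hot_query, (42,))

print("Before index:", before)
print("After index: ", after)
```

Without the index, SQLite should report a full scan of `orders`; with it, the plan should mention `idx_orders_customer`. The exact wording of the plan output varies between SQLite versions, and on a real system you would also measure timings against realistic data volumes before deciding the tweak was worthwhile.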

Two final points:

- Bad performance will often cause a project to fail, so avoid it from the very beginning.

- Good performance will often not be noticed. In the realm of performance, an absence of criticism is almost as good as praise.
